AGI achieved; now what?

First of all, yes. I'm calling it.

But...

[This is a discussion on AI, the future of humankind, post-labor economics and our research on achieving infinite productivity.]

Let's start by addressing what AGI — Artificial General Intelligence — means.

There is a wide range of definitions across the tech industry, but they all seem to converge on a human-level sort of artificial intelligence.

"[...] with capabilities that rival human cognitive functions"

"[...] can demonstrate human-like intelligence across multiple domains"

"[...] that can match or exceed human reasoning abilities"

Considering just intelligence, it's natural to think of it as a model. More specifically, a large language model, as those are the ones that feel intelligent today.

But here is the thing: by that definition, it was achieved long ago. Likely with the introduction of GPT-4, given that no single human could beat it on a wide test.

Consider this thought experiment: design a test covering 20 diverse domains - quantum physics, medieval history, contract law, protein folding, Mandarin translation, statistical analysis, creative writing, etc. Now find a single human who could outperform GPT-4 on that.

In fact, let's exercise this now.

I've asked 4 questions to GPT 4.1 mini (the worst currently available model on ChatGPT), plus a double check by o3 (which is a system, not just a model — more on that below).

- Write Rust code (with unit tests) that applies SIMD-vectorized ReLU to a f32 slice using std::arch.

- Create a decision flowchart for diagnosing iron-deficiency anemia in primary care using ferritin, transferrin saturation, CRP, and colonoscopy referral thresholds.

- Prove every finite group of order p² is abelian.

- Draft a GDPR-compliant privacy notice for a wearable glucose monitor that transfers data to U.S. servers, citing legal bases and retention limits.

Can you provide better answers to all of them?

To any of them?

Can anyone?

Speaking for myself, I can barely understand the questions. Maybe a PhD could answer better... one of them.

Of course, if I had an infinite amount of time and access to all human tools (like the internet), I could. But that's not what it did.

All answers came in less than 3 seconds and on the first try. It's as if you replied instantly, word by word, with no prior search, no backspace, no drafting; nothing. Just a pure instant answer, like the one you give when asked what 2+2 is.

While a domain expert might beat it on their specialty, no human possesses expert-level knowledge across the breadth that current LLMs demonstrate. This isn't about peak performance in narrow domains - it's about consistent competence across unlimited domains.

Being 80% competent on everything is way more valuable than being 100% competent on one thing. By far.

Intelligence-wise, no single human beats GPT-4.

AGI has been achieved.

Right?

So why am I not feeling the AGI?

...you'd ask me.

Or, more importantly, why do I still have to work?!

Well, let's picture what it takes to actually produce value.

Say I want to publish a book on software development, which is a topic I'm comfortable with since I've been doing it for ~15 years.

Can I do it in a single session, like the GPT examples above? Can I sit down, open my preferred text editor, and just type it word by word until it's done?

Of course not!

I need to iterate (a lot!) on it.

I'll write, re-write, research, change my mind, read it again and again, fix typos, and a lot more, until it's done. And that takes time!

In fact, it's said to take something between weeks and years to write any publishable book.

Stephen King, a professional writer, strives to write 10 pages per day.

So intelligence — the ability to coherently predict the future — is not enough.

You also need a feedback loop.

So... a loop, huh?

Let's break it down.

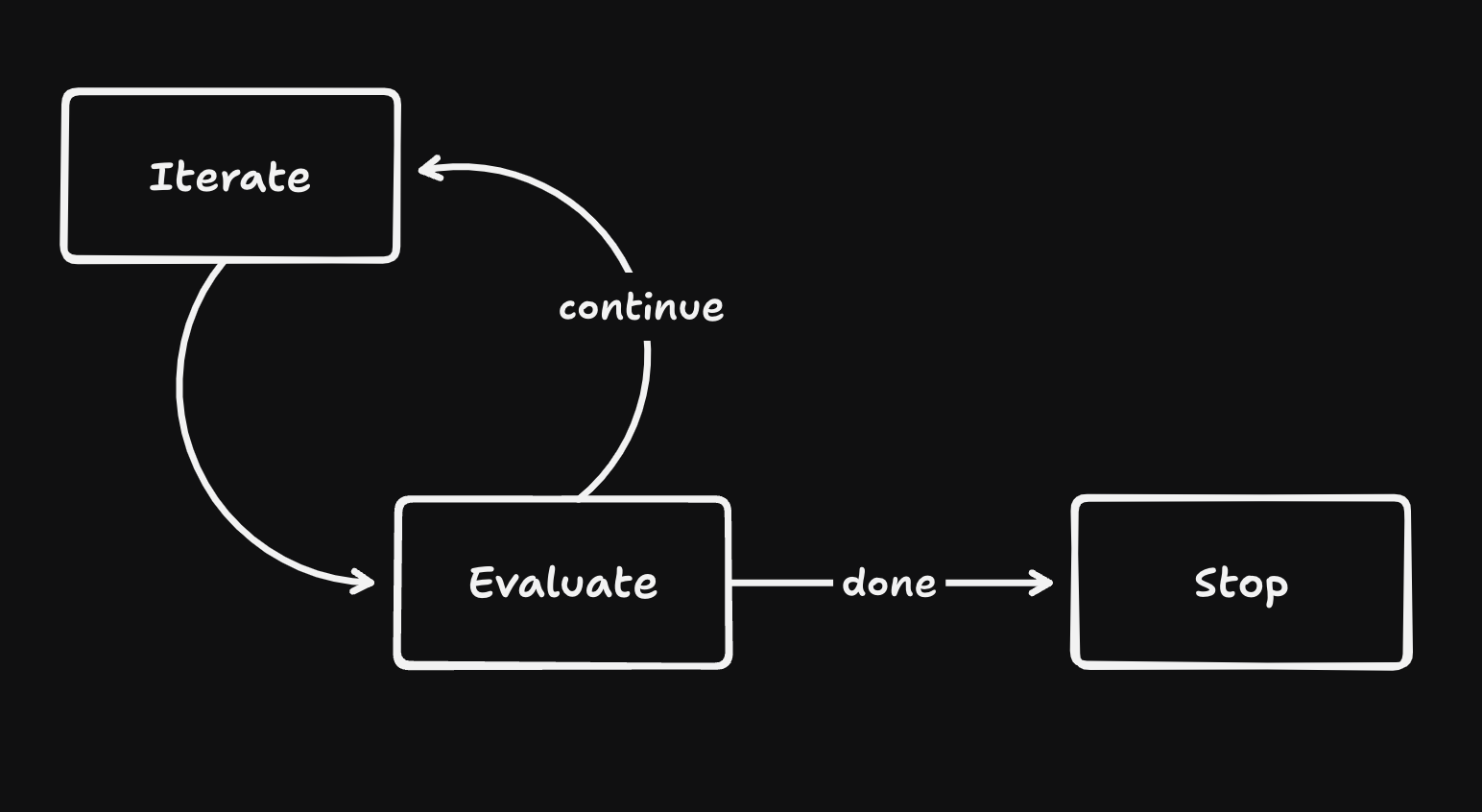

The loop is a simple concept, repeating the same action until a condition is met.

And the feedback is an evaluation of the current state: a judgement. It answers the question "is the condition met?" or, in other words, "should the loop stop or continue?"
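To make the two pieces concrete, here is a minimal sketch of a feedback loop. The names `feedback_loop`, `act`, `is_done` and `max_iterations` are my own illustrative choices, and the toy example (halving an "error" until it is small enough) is just an assumption to have something to run.

```python
from typing import Callable, Tuple


def feedback_loop(
    state: float,
    act: Callable[[float], float],
    is_done: Callable[[float], bool],
    max_iterations: int = 1000,
) -> Tuple[float, int]:
    """Repeat `act` until `is_done` says the condition is met."""
    iterations = 0
    # The feedback: evaluate the current state before every new round.
    while not is_done(state) and iterations < max_iterations:
        state = act(state)  # the loop: do the same action again
        iterations += 1
    return state, iterations


# Toy example: each pass halves the remaining "error" of a draft,
# and we stop once it is below a "good enough" threshold of 1.0.
final_error, rounds = feedback_loop(
    100.0,
    act=lambda error: error / 2,
    is_done=lambda error: error < 1.0,
)
print(final_error, rounds)
```

The `max_iterations` cap plays the role of a budget: even if the condition is never met, the loop stops when the energy runs out.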

If you are following AI progress, it might have rung a bell already, because that's what reasoning is all about. Iterating.

There are "hard" tasks, like math, where the output is either right or wrong. But that's not true for most things. Creative things!

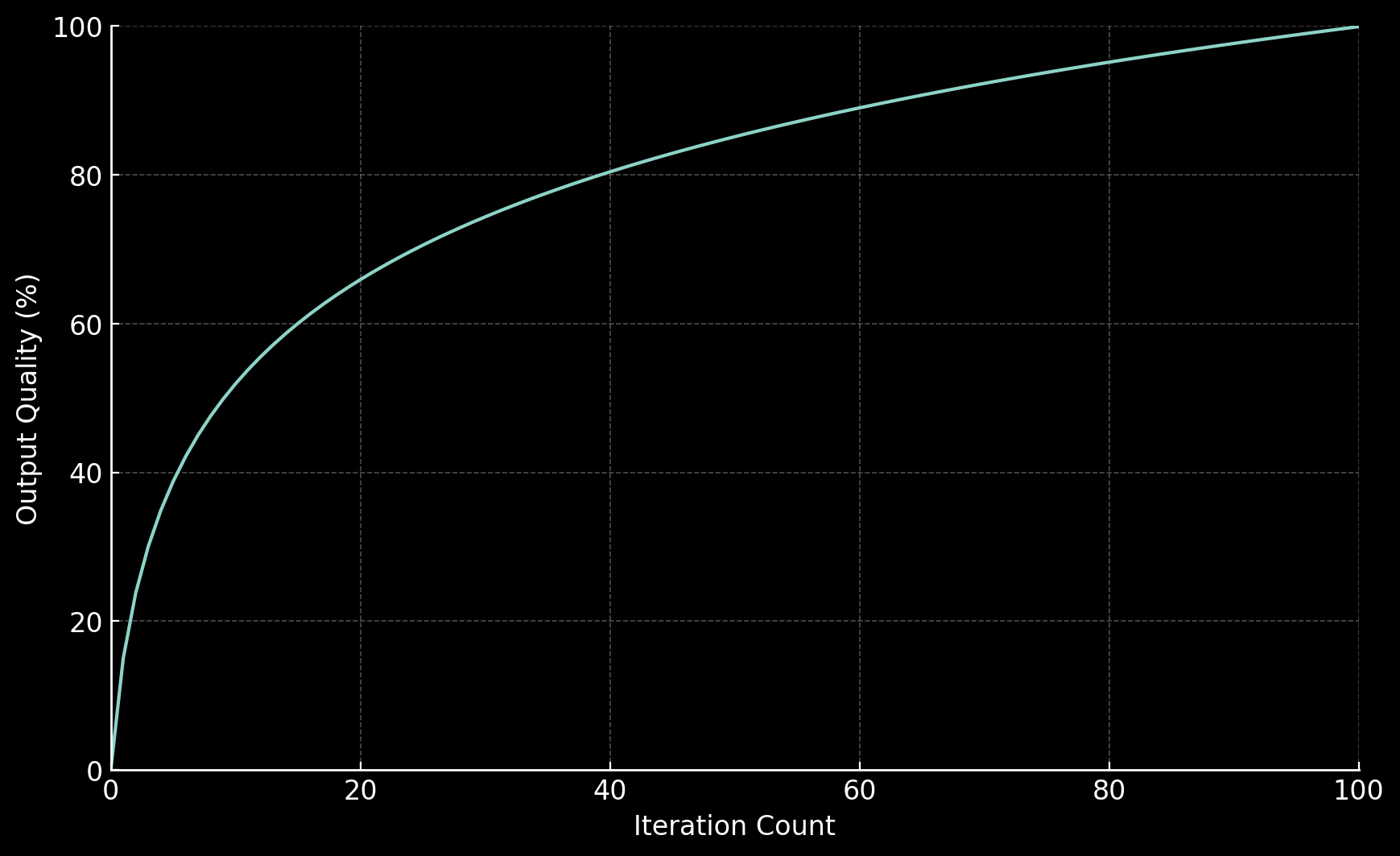

On those, the more you iterate, the better it gets. Forever, but logarithmically. You can always keep going, but the improvement for each iteration gets lower.
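A rough way to picture "forever, but logarithmically" is below. The logarithmic curve is only an illustrative assumption on my part (real tasks won't follow it exactly), and `quality` and `marginal_gain` are made-up names.

```python
import math


def quality(iterations: int) -> float:
    """Assumed toy model: quality grows with the log of the iterations."""
    return math.log1p(iterations)


def marginal_gain(iterations: int) -> float:
    """How much the n-th iteration adds on top of the previous one."""
    return quality(iterations) - quality(iterations - 1)


# The gain of a single extra iteration keeps shrinking, but never hits zero.
gains = [marginal_gain(n) for n in (1, 10, 100, 1000)]
for n, gain in zip((1, 10, 100, 1000), gains):
    print(f"iteration {n}: +{gain:.4f}")
```

Under this assumption every iteration still helps, yet the thousandth one adds far less than the first.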

Think about how you write an email.

You start with a few words, read them, delete some, replace others, make it longer, then shorter. You change the tone, add details, add attachments... You essentially iterate until you evaluate it as "good enough".

Sometimes it's not as good as you'd like, but your time is constrained, so you move on to the next task.

That's also how it works for AIs.

OpenAI's o1, announced in Sep. 2024, was the first model to introduce a feedback loop, along with the idea of a "thinking budget": the analog of your time in the example above.

Since then, all major LLM providers have introduced "reasoning models", setting it as an industry standard.

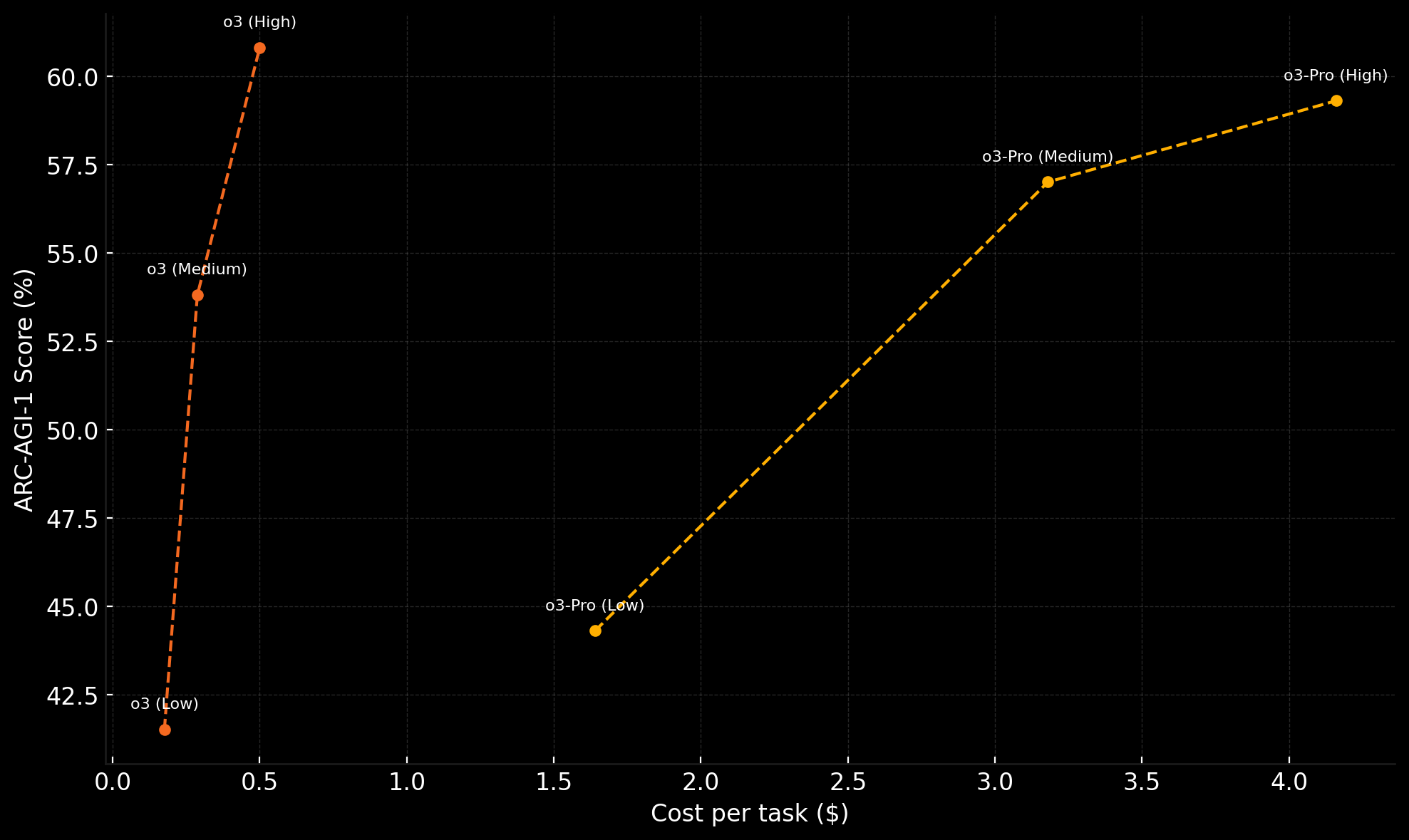

These are the results of o3 (the latest OpenAI model) on ARC-AGI-1, a very popular benchmark for AGI. Note that adding more budget results in a better score, but each step improves it less: increasingly expensive, never capped.

In other words, unlimited intelligence.

It's that simple, but keep in mind that "unlimited intelligence" is just as limited as your wallet, as it gets exponentially more expensive.

I should also mention that there is a secondary hidden cost: time, nature's ultimate currency! So from now on, I'll refer to the combined cost of time and money as energy.

Until AI does my bookkeeping and files my taxes I don't give a shit about it.

— @AtomicChild September 26, 2024

We're getting there. Stay with me.

Productivity is what matters

Benchmarks, like ARC-AGI-1, are sets of tests used to evaluate and compare model performance.

Pretty much like how we test humans, they're usually performed with no access to external tools or help of any kind.

It's the equivalent of sitting down with a pen and paper. You can reason as much as you want (within the test limits), but you can't reach for a calculator or the internet or anyone else.

While it makes sense for comparison, benchmarks are not a good representation of what a system can produce (they're testing models, not systems, after all). Again, similarly to how one can do great on a test but fail miserably on an actual job.

When facing a real problem in the real world, you should definitely use tools. Lots of them.

Tools, as a form of technology, are really just knowledge — which can be produced by iterating on a task.

How it goes for solving X:

- "To solve X I'm going to do Y" (reasoning)

- Do Y. (iterating)

- Check if Y solved X. (evaluating)

- Store knowledge on if Y solves X or not. (learning)

- If not solved, start over, including the new knowledge. (looping)
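The five steps above (reasoning, iterating, evaluating, learning, looping) can be sketched as a small function. Everything here is hypothetical and only meant to show the shape of the process: `solve`, `propose`, `do` and `check` are names I made up, and the toy problem X is "find a number whose square is 49".

```python
from typing import Callable, Dict, Optional, Tuple

Knowledge = Dict[int, bool]  # maps each attempted Y to "did it solve X?"


def solve(
    propose: Callable[[Knowledge], Optional[int]],
    do: Callable[[int], int],
    check: Callable[[int], bool],
    max_rounds: int = 100,
) -> Tuple[Optional[int], Knowledge]:
    knowledge: Knowledge = {}
    for _ in range(max_rounds):      # looping
        y = propose(knowledge)       # reasoning: "to solve X I'll do Y"
        if y is None:                # nothing left to try
            break
        outcome = do(y)              # iterating: do Y
        solved = check(outcome)      # evaluating: did Y solve X?
        knowledge[y] = solved        # learning: store it, success or not
        if solved:
            return y, knowledge
    return None, knowledge


def next_untried(knowledge: Knowledge) -> Optional[int]:
    """Naive 'reasoning': pick the smallest candidate not tried yet."""
    return next((y for y in range(20) if y not in knowledge), None)


answer, learned = solve(next_untried, do=lambda y: y * y, check=lambda out: out == 49)
print(answer, len(learned))
```

Note that the failed attempts are stored too. That is the point of the learning step: the next round of reasoning starts from more knowledge, regardless of the result.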

When using a tool, you're essentially interfacing with someone else's knowledge, which was produced with their intelligence + their energy on lots of iterations, and served to you with most of the complexity abstracted away.

In fact, when you use a calculator, you're indirectly building on top of the assembly work that went into making it, plus the logistics to ship it, plus the engineering to design such a tool, plus the mathematical principles observed and documented over the centuries before those, and so on.

Knowledge is the outcome of iterating on a problem, regardless of the result.

AI systems, again very similarly to humans, can massively benefit from having access to humankind's knowledge. Be it packed as a tool, a service, a skill, a piece of text, an image, a link, ... it doesn't matter. More knowledge translates into more productivity, meaning the system is capable of producing more value.

Today, any modern LLM, from any provider, has enough intelligence to use any tool, or to learn how to use any tool through a feedback loop. It's analogous to learning how to drive by just driving.

A very interesting example is playing games. The 3 most popular models are playing Pokémon right now, with no prior instructions on how the game works, its mechanics, or its goals.

All of them have shown they can finish the game using nothing but their intelligence, a feedback loop and tools such as moving and taking notes, very similar to how a human would do it.

More details are available on their respective Twitch channels.

When we think of biological intelligences (homo sapiens), we are used to believing that virtually anything is achievable.

I mean... I could do open heart surgery. Or design a reusable rocket. Or surf a monster wave.

I could go through college, specialization, residency, training... and, in about 10 years, perform such surgery and save a life.

I could, as anyone could, given enough energy and access to humankind's knowledge.

Similarly, an AI system can.

Feed it with a task, proper tools and enough energy, put it in a loop, and it'll eventually get there. No task is impossible.

From that point on, we should not be discussing if AI can achieve this or that, but instead if it's viable.

The cost situation

I could start with an elaborate argument on how the cost per unit of intelligence is already much lower on artificial intelligences than on biological ones, but it's not worth your time, given that inference cost is very quickly trending towards zero.

What you should know:

- You can run powerful models on your own hardware. Today.

- The cost to use AI falls about 10x every 12 months. It got 1000x cheaper in 3 years. (suck it, Moore)

- Many companies are building specialized inference hardware, leading to faster and cheaper services.

- Open-source models consistently offer Pareto-efficient trade-offs between cost and capability, relative to state-of-the-art models.

- AI will ultimately render money worthless, so let's not overthink this 😄

Considering all that, any use case that is not yet viable will be very soon.
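To see how the 10x-per-year figure from the list above compounds into 1000x in 3 years, here is a quick back-of-the-envelope sketch. The Moore's law comparison assumes the common "doubling roughly every two years" reading, which is my assumption and not a precise figure, and `improvement_factor` is a made-up helper name.

```python
def improvement_factor(rate: float, period_years: float, years: float) -> float:
    """Total improvement after `years`, given `rate`x every `period_years`."""
    return rate ** (years / period_years)


# AI usage cost: about 10x cheaper every 12 months, over 3 years.
ai_factor = improvement_factor(10, 1, 3)

# Assumed Moore's law pace: about 2x every 2 years, over the same 3 years.
moore_factor = improvement_factor(2, 2, 3)

print(f"AI cost: {ai_factor:.0f}x cheaper, Moore's pace: {moore_factor:.1f}x")
```

Under these assumptions, the same three years give roughly 1000x on one side and under 3x on the other.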

"Too cheap to meter", as they say.

It is happening!

Whether I was able to convince you that current models are AGI or not, it doesn't really matter because it is happening!

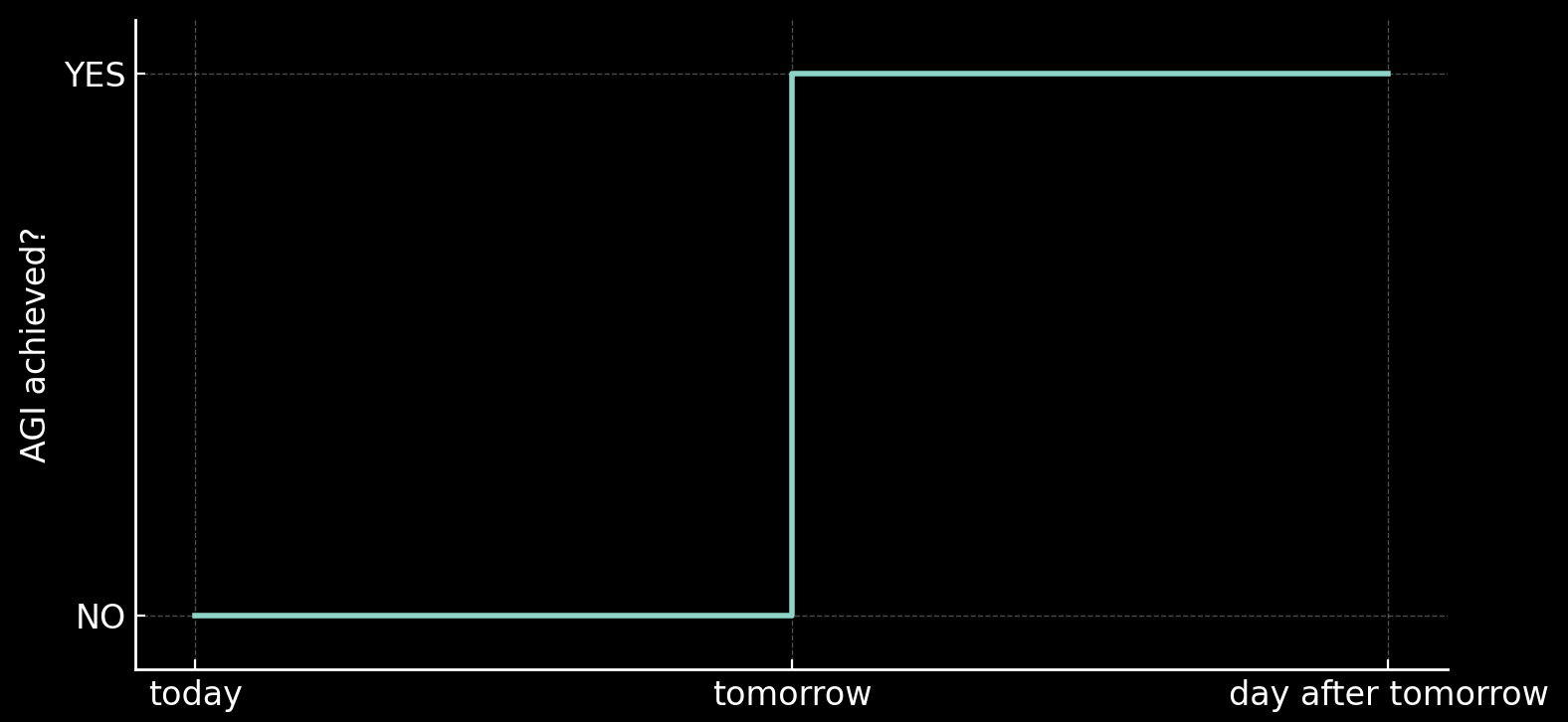

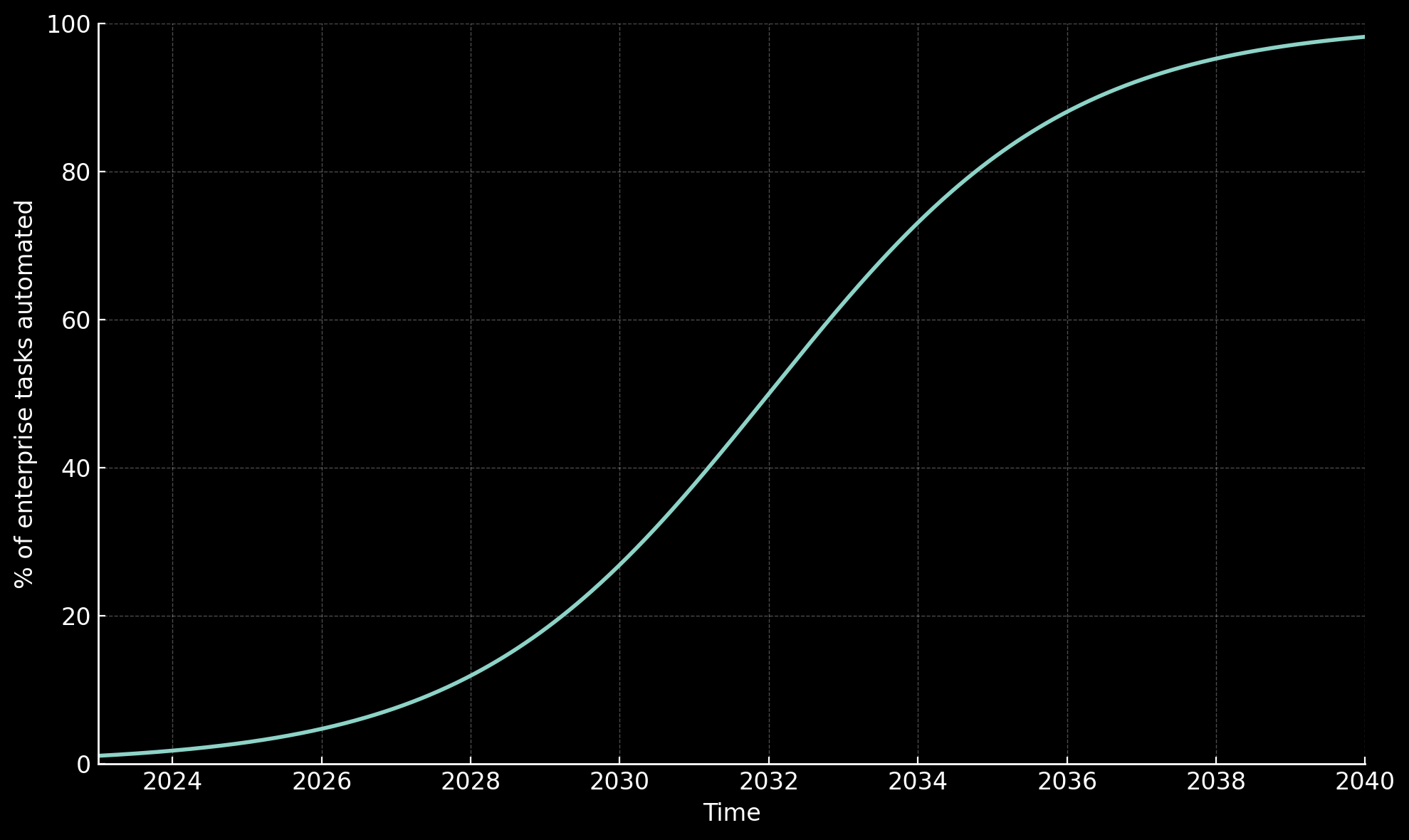

If you're waiting for a single, dramatic "AGI moment", you'll miss the real story. AGI isn't a switch that flips, but rather a continuous process that's happening right now: everywhere, every second.

It doesn't look like this:

But like this:

Every layer of every industry is getting unimaginable and unprecedented efficiency gains, and that's awesome!

The best way to observe this effect right now is in the software industry. The energy required to develop and maintain almost any kind of software has dropped by ~80% in the last two years. I'll say it again: building software is 80% CHEAPER TODAY THAN IT WAS TWO YEARS AGO. That's not an exaggeration, especially for consumer software.

It doesn't matter if there is a human in the loop or not. It doesn't matter how many engineering jobs were lost or not. It doesn't matter if you directly benefit from it or not.

Just one thing matters: building software is getting much cheaper, and that's good for everybody, as that translates into more iterations, more features, more products, more libraries, more services, more value. For everyone.

Coding is among the first industries to collect those gains, not because it's easy, but because of:

- Being mostly digital, making it easy to interface existing tools to AI.

- Having a high aggregated value, making it worth it even with today's inference prices.

- Being universal, as most industries run on software. You can solve a countless and ever-growing number of challenges with code.

A few examples of products driving such efficiency gains are Cursor, Claude Code, Codex and many others. All of them work in a similar fashion to what we have described so far.

But it's not stopping with computer programs. Every production chain, every supply chain, every good or service being provided is already exponentially collecting those gains.

ChatGPT, when paired with o3 or o4-mini, also works similarly. During its chain of thought, it does way more than just reasoning. It searches the web, writes and runs code, zooms into images and much more. You can even plug in your own tools via MCP, which is another project allowing knowledge sharing among apps, and has been gaining a lot of traction! That's why o3 is a system, not just a model.

Think with me. The highest cost in most industries is human labor, meaning that every human-second saved by using an AI system, from a simple copy/paste from/into ChatGPT up to a fully automated swarm of agents, saves energy and will eventually translate into cheaper and better products.
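Here is a minimal sketch of what "a system, not just a model" can look like: a model that picks actions, a set of tools, and a loop with a budget. This is not how ChatGPT or MCP are implemented. `run_system`, `toy_model` and `calculator` are hypothetical stand-ins, and the "model" is a hard-coded function so the example can run by itself.

```python
from typing import Callable, Dict, List, Tuple

Tool = Callable[[str], str]
Model = Callable[[str, List[str]], Tuple[str, str]]  # returns (action, argument)


def run_system(task: str, model: Model, tools: Dict[str, Tool], max_steps: int = 10) -> str:
    notes: List[str] = []  # what the system has learned so far
    for _ in range(max_steps):  # the budget
        action, argument = model(task, notes)
        if action == "answer":
            return argument
        if action not in tools:
            notes.append(f"unknown tool: {action}")
            continue
        # Use the tool and keep the result as feedback for the next step.
        notes.append(f"{action}({argument}) -> {tools[action](argument)}")
    return "out of budget"


def calculator(expression: str) -> str:
    """A tiny tool: multiplies two integers written as 'a*b'."""
    left, right = expression.split("*")
    return str(int(left) * int(right))


def toy_model(task: str, notes: List[str]) -> Tuple[str, str]:
    """Stand-in for an LLM: call the calculator once, then answer."""
    if not notes:
        return ("calculator", "6*7")
    return ("answer", notes[-1].split(" -> ")[1])


result = run_system("What is 6*7?", toy_model, {"calculator": calculator})
print(result)
```

Swapping the dictionary of tools changes what the system can do without touching the model, which is the idea behind plugging in your own tools.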

As a software engineer, I can produce 5x more value per energy today than I could 2 years ago. Mostly due to my direct usage of AI, but also due to everyone else's usage of AI. Every open-source library we rely on is evolving faster than ever. Every tool I use is getting better every week. And so on.
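As a sanity check, the "5x more value per energy" figure and the "80% cheaper" figure from earlier are two views of the same number, assuming "value per energy" simply means output per unit of cost. The helper name `cost_reduction` is mine.

```python
def cost_reduction(productivity_multiplier: float) -> float:
    """Fraction of cost saved per unit of value, given an N-times productivity gain."""
    return 1 - 1 / productivity_multiplier


# Producing 5x more value with the same energy means each unit of value
# costs one fifth of what it used to.
saved = cost_reduction(5)
print(f"{saved:.0%} cheaper per unit of value")
```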

To better visualize this process, I highly suggest thinking more of tasks and less of roles.

There won't be a day where AI is suddenly capable of replacing your role entirely, but the tasks you do to fulfill your job are quickly getting cheaper and better. Picture how ~everyone has AI assistance to write e-mails and documents, or how ~everyone has near-perfect translation for ~every language on earth. That affects everyone everywhere, and compounds.

"They're not coming for me though, lol"— my barber

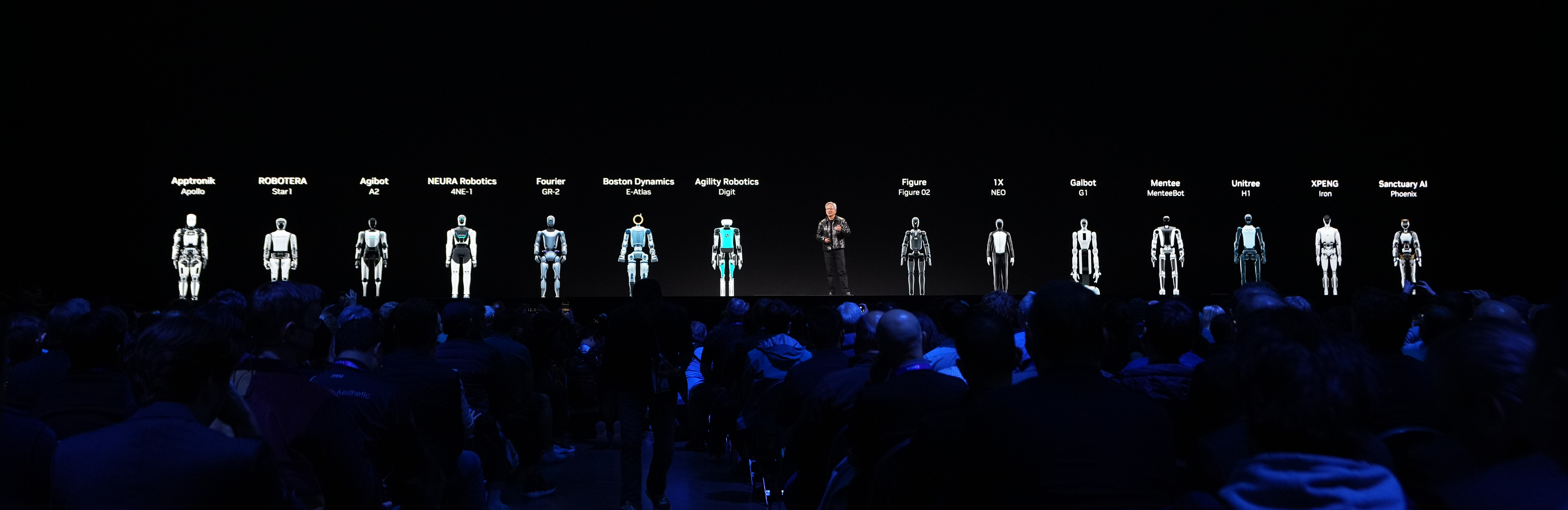

This is Jensen Huang, CEO of Nvidia, showing just a few of the humanoid robots being developed.

Sometimes an image is worth a million words.

As I said, every industry.

What now?

I hope that at this point you're convinced that it's happening, and that there's no turning back; which leads to a lot of questions like "What will happen when humans are no longer employable?" — and those are really important questions.

For thousands of years, survival, status, and meaning flowed from productive contribution. Every major social form encoded this. Even our earliest hunter-gatherer ancestors organized their entire social structures around work divisions.

Before I tell you what I think will happen, I must tell you that this is extremely hard to predict. Impossible, to be honest. There are so many variables, so many interactions, so many actors, that no one can tell you exactly what will happen, much less when. Not even the most brilliant minds can.

What I can tell you is that I'm extremely optimistic.

I believe that we'll live in a world where humans are free from boring work. One where you don't have to labor for a living, and are free to "work" on whatever you want.

Nature seems to have this intrinsic property of finding equilibrium. From the most fundamental particles to the most complex inter-galactic systems, it's always trending towards balance, and I don't think it's different here.

One very interesting way to describe this future is: artificial and biological intelligences are merging.

Humans already function as a single network by orchestrating our behavior through communication. Everything and everyone are connected.

No single meaningful achievement from humankind would be doable alone. There is no internet without computers. No computers without electronic components. No electronic components without a deep understanding of electricity and physics, and so on. All the way back to the first languages, ~100,000 years ago. Many generations of humans iterating on their own issues, collaborating for a better future, compounded.

Collaboration. That's the key.

It's what enables us to enter a 100+ ton pressurized tin and cruise the planet as if it were the most natural thing in the world, or to speak live with a human orbiting 400 km above us, or whatever else blows your mind.

It's the ability to represent, understand and disclose complex ideas, through language, that makes us powerful.

Now, AI is joining that network.

A new, tireless, infinitely replicable form of intelligence is iterating together with us, rather than replacing us, and that will lead humankind to infinite productivity.

If we were capable of seeing society just 50 years from now, we'd think they were gods. Not much different from what a person from 1000 years ago would think of us today, but on a much shorter timescale.